How to pick the wrong music for soundtracks: choose by the lyrics.

A very common trend in many recent soundtracks is choosing the music tracks according to their lyrics, which by definition requires the use of songs. Songs, unbeknownst to many, are not the only musical form (interesting, isn’t it? For roughly a century, “song” has been commonly used as the standard term for any piece of music. A topic probably worth another post).

One example among many (which I’ll keep anonymous out of bon ton, although series aficionados might be able to spot it): I recently watched a TV series with an episode containing a crucial scene, the death of a woman, scored with the well-known song “Ain’t no sunshine when she’s gone”. And this is the main problem: emotionally speaking, the music didn’t connect with the scene. At all. Here’s why: lyrics are not music. Lyrics are words, a separate and completely autonomous reality. So autonomous that you can make art out of lyrics alone: it’s called poetry.

Music has an emotional content of its own, independent of lyrics.

Lyrics might contain words that match the context on screen. But the music, the actual sounds that compose it, is not guaranteed to do the same.

This is the very same reason why you don’t have to learn to listen to music: you just do it. You can listen to music you’ve never heard before, played on instruments you’ve never seen by people you’ve never met, and like it; for those who want to, this happens on a daily basis. The same cannot be said of lyrics: you have to know the language and its sociological context, and share grammar, syntax, vocabulary, culture… There has to be a lot of common ground to make verbal communication possible. Because, again, language is made of abstract symbols, which acquire meaning only when you share enough cultural context to understand what they refer to. Music is not: music is an experience, like watching a sunset or smelling freshly baked bread. You don’t need somebody to explain what it is: you just feel it.

The problem is rooted in the fact that those who choose the music on these occasions lack musical knowledge. And by “musical knowledge” I don’t mean being up to date on who’s at the top of the latest Spotify chart or trending in Google searches: I mean being able, given paper, pen and piano, to write a symphony. Or a Hard Rock piece, or a Reggae one: being able to make music.

Google to the rescue.

So, the moment they were given the task of choosing the music, they were disarmed: where to look? Because, to pick something from a topic as colossal as music and make an informed choice, you have to understand how it works. A bit like when your car suddenly stops, you open the hood, and all you see is a tangle of mechanical parts: chaos. You have no idea what’s what, or how to figure out a solution; whereas, when your mechanic does the same, he knows what he is looking at. And how to make sense of it, and fix it: he knows how to make order out of chaos (a treat for all of you Jungians out there).

So, back to music: should I use violins? Or would plucked strings be better, say a guitar? Maybe an electric one? How many strings… 6? 7? 8? What tuning? Or maybe synthesizers would be better: analog? Analog modular? Digital? Digitally stabilized analog? Maybe virtual? …virtual analog, or software ROMplers (can we truly call them synthesizers, by the way?)? And so forth: I could go on for a very long time through all the infinite possibilities. A very simple task for someone who understands orchestration, composition, instrumental practice and everything else that makes music. But for someone who doesn’t? Hieroglyphics. Uncharted territory.

So, how to hack it? Simple: lyrics.

“This project is an epic movie with medieval-like imagery mixed with futuristic science fiction, with strong references to Norse mythology”

“Ah, simple: let’s Google what songs have lyrics about Norse mythology – there it is! Led Zeppelin!”

But does Led Zeppelin’s musical style match epicness, futuristic science fiction or medieval imagery…? Not at all. (This is another example, by the way.)

This also opens up another very interesting topic: why aren’t musicians chiming in to help? It’s not that the world doesn’t like music anymore: everyone likes music, even those who don’t yet know they do. Musicians just need to show up and say: “This is what I do for a living: let me help you!”

In conclusion

Whenever you have to choose music for a soundtrack, choose music first of all. Lyrics are a nice extra.

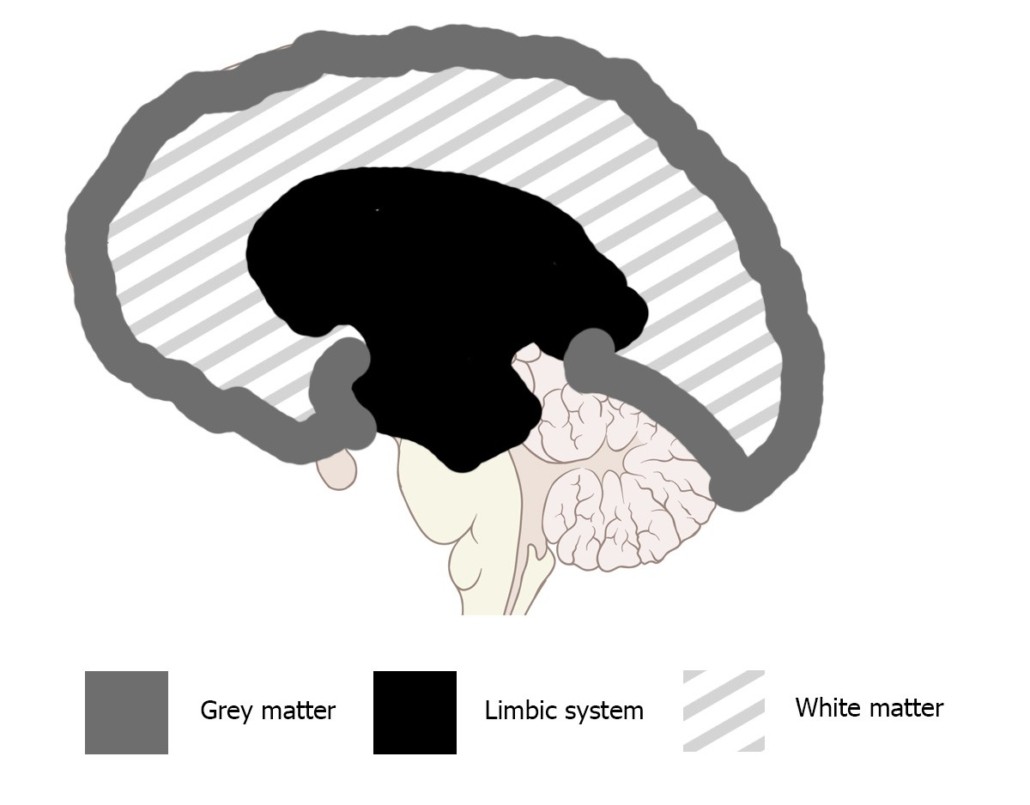

If you don’t, no amount of apologies or rationalizations is going to fix what you’ve broken: your brain is the one that likes music, whether you want it to or not. That’s why I used sunsets and the smell of fresh bread as examples: those are direct neural inputs that give you back feelings, bypassing your cognitive side entirely. Just like music does. It’s also why brains are surprisingly good at picking good music, until we decide to mess with them, of course (which is more or less the same principle I discussed in this other article of mine: https://www.linkedin.com/pulse/difference-between-rational-social-purchase-lorenzo-lmk-magario/ ).